Service Orchestration

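飞牛OS (FnOS) is honestly not very stable. Many things that worked fine at first broke after an update, such as the video app I spent a whole afternoon tinkering with. On top of that, the system's logging is about as bad as it gets, so when something goes wrong it is very hard to tell why, and I kept having to reinstall. Every reinstall meant redeploying all the services running on it, which is a real hassle, so I moved some of those services off FnOS and deployed them in a separate LXC.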

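The overall orchestration follows roughly the same approach as on my Hong Kong VPS: a single compose.yml is the core, and each service's compose configuration is written in its own sub-file.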

Gitea

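Configuring SSH passthrough

First, refer to the Gitea article on SSH container passthrough. We need to create a `git` user and note its uid and gid:

```text
Service-Component:~$ id
uid=1000(git) gid=1000(git) groups=1000(git)
```

Next, switch to the `git` user (for example with `su - git`) and generate a key pair:

```shell
ssh-keygen -t rsa -b 4096 -C "Gitea Host Key"
```

When it finishes, `/home/git/.ssh/` should contain `id_rsa` and `id_rsa.pub`. Append the public key to `authorized_keys`:

```shell
cat /home/git/.ssh/id_rsa.pub >> /home/git/.ssh/authorized_keys
```

Then create the file `/usr/local/bin/gitea` with the following content:

```shell
#!/bin/sh
ssh -p 50022 -o StrictHostKeyChecking=no git@127.0.0.1 "SSH_ORIGINAL_COMMAND=\"$SSH_ORIGINAL_COMMAND\" $0 $@"
```

Afterwards, remember to make it executable with `chmod +x /usr/local/bin/gitea`.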

Note that port 50022 is used here as the host port mapped to Gitea's SSH port.

At this point, the SSH passthrough setup is essentially complete.
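If you rebuild this host often, you can generate the shim file with a script instead of editing it by hand. The following is a minimal POSIX sh sketch. `install_gitea_shim` is a hypothetical helper name. It takes the target path and port as arguments, which default to `/usr/local/bin/gitea` and 50022 from above. It writes the same `ssh` forwarding line and sets the executable bit. The backslashes keep `$SSH_ORIGINAL_COMMAND`, `$0` and `$@` literal in the generated file, so they are expanded only when the shim itself runs.

```shell
#!/bin/sh
# Hypothetical helper: write the Gitea SSH passthrough shim and make it executable.
# Usage: install_gitea_shim [target] [port]
install_gitea_shim() {
    target="${1:-/usr/local/bin/gitea}"
    port="${2:-50022}"
    # Unquoted heredoc: $port is expanded now, while \$ keeps the other
    # variables literal so they are expanded only when the shim runs.
    cat > "$target" <<EOF
#!/bin/sh
ssh -p $port -o StrictHostKeyChecking=no git@127.0.0.1 "SSH_ORIGINAL_COMMAND=\"\$SSH_ORIGINAL_COMMAND\" \$0 \$@"
EOF
    chmod +x "$target"
}
```

Running `install_gitea_shim` as root with no arguments reproduces the file described above.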

Configuring the Gitea container

Next, start Gitea:

```yaml
services:
  gitea:
    container_name: gitea
    image: "docker.gitea.com/gitea:1.23.7"
    volumes:
      - ./gitea:/data
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
      - /home/git/.ssh:/data/git/.ssh
    restart: always
    environment:
      - USER_UID=1000
      - USER_GID=1000
      - APP_NAME=Gitea
      - DOMAIN=gitea.evalexp.top
      - SSH_DOMAIN=gitea.evalexp.top
      - SSH_PORT=22
    networks:
      - app-net
    ports:
      - "127.0.0.1:50022:22"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.gitea.rule=Host(`gitea.evalexp.top`) || Host(`gitea`) || Host(`gitea.home.net`)"
      - "traefik.http.routers.gitea.service=gitea"
      - "traefik.http.services.gitea.loadbalancer.server.port=3000"
      - "traefik.http.routers.gitea.entrypoints=https"
      - "traefik.http.routers.gitea.tls=true"
```

Note: `USER_UID` and `USER_GID` must match the uid and gid of the `git` user created earlier.
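To avoid typos here, you can read the two values from the host account instead of typing them by hand. The following is a minimal POSIX sh sketch. `gitea_uid_env` is a hypothetical helper name; it only wraps `id -u` and `id -g`:

```shell
#!/bin/sh
# Hypothetical helper: print the USER_UID/USER_GID lines for the Gitea
# service from an existing host account, so the two cannot drift apart.
gitea_uid_env() {
    user="${1:-git}"
    uid="$(id -u "$user")" || return 1
    gid="$(id -g "$user")" || return 1
    printf 'USER_UID=%s\nUSER_GID=%s\n' "$uid" "$gid"
}
```

Run it as `gitea_uid_env git`. For the account shown earlier it should print `USER_UID=1000` and `USER_GID=1000`, which you can compare against the `environment:` block.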

Configuring the Runner

LXC setup

Since the Runner can be fairly resource-hungry, from this step on the containers are no longer started on FnOS.

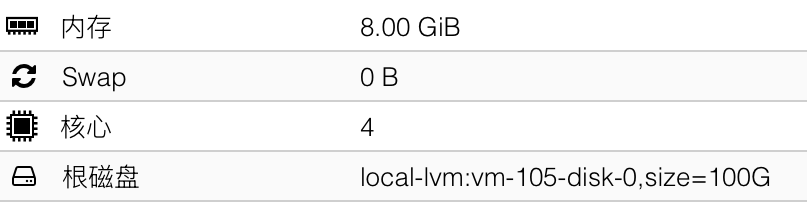

首先到PVE中创建一个LXC容器,选择Alpine镜像并且勾选嵌套即可;我这里分配了4C8G + 100GSSD的配置:

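Since the Runner itself relies on Docker, we deploy the Runner with Docker as well. First, follow the Alpine article to install Docker and enable it at boot. On Alpine this typically comes down to `apk add docker docker-cli-compose` followed by `rc-update add docker default`.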

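Write the following into `/etc/sysctl.conf`:

```text
net.ipv6.conf.all.disable_ipv6=1
net.ipv6.conf.default.disable_ipv6=1
net.ipv6.conf.lo.disable_ipv6=1
```

Then enable the sysctl service so these settings are applied at boot:

```shell
rc-update add sysctl default
```

With that, the LXC is ready for the Runner container.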
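Because this LXC may be rebuilt, it can help to make the sysctl step idempotent, so that running it twice does not duplicate lines in `/etc/sysctl.conf`. The following is a minimal sketch, assuming a POSIX sh (busybox ash on Alpine works) with `grep` and `tail`. `ensure_lines` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: append each given line to a file only if that exact
# line is not already present, so the step can be re-run safely.
ensure_lines() {
    file="$1"; shift
    touch "$file"
    # Make sure an existing last line ends with a newline before appending.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    for line in "$@"; do
        grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
    done
}

# Example usage for the step above:
#   ensure_lines /etc/sysctl.conf \
#       net.ipv6.conf.all.disable_ipv6=1 \
#       net.ipv6.conf.default.disable_ipv6=1 \
#       net.ipv6.conf.lo.disable_ipv6=1
#   rc-update add sysctl default
```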

Runner configuration

First, generate a Runner configuration file:

```shell
docker run --entrypoint="" --rm -it docker.io/gitea/act_runner:latest act_runner generate-config > config.yaml
```

Then edit the file and configure the cache section:

```yaml
cache:
  # Enable cache server to use actions/cache.
  enabled: true
  # The directory to store the cache data.
  # If it's empty, the cache data will be stored in $HOME/.cache/actcache.
  dir: ""
  # The host of the cache server.
  # It's not for the address to listen, but the address to connect from job containers.
  # So 0.0.0.0 is a bad choice, leave it empty to detect automatically.
  host: "192.168.31.51"
  # The port of the cache server.
  # 0 means to use a random available port.
  port: 8088
  # The external cache server URL. Valid only when enable is true.
  # If it's specified, act_runner will use this URL as the ACTIONS_CACHE_URL rather than start a server by itself.
  # The URL should generally end with "/".
  external_server: ""
```

Set `host` to this LXC's own address. Port 8088 is the port Docker publishes later. A fixed port is needed rather than a random one because job containers reach the cache server through that published host port.
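If you regenerate `config.yaml` often, you can script this edit. A blind `sed 's/host:.*/.../'` is risky because other sections of the file may contain keys with the same name. The following section-aware awk sketch changes only `host` and `port` inside the top-level `cache:` block. It assumes the layout produced by `generate-config`: top-level keys at column 0 and their children indented. `set_cache_endpoint` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: set cache.host and cache.port in an act_runner
# config.yaml, touching only keys inside the top-level "cache:" block.
# Assumes top-level keys start at column 0 and children are indented.
set_cache_endpoint() {
    file="$1"; host="$2"; port="$3"
    tmp="$(mktemp)" || return 1
    awk -v h="$host" -v p="$port" '
        # A non-indented, non-comment line starts a new top-level section.
        /^[^ \t#]/ { in_cache = ($0 ~ /^cache:/) }
        in_cache && /^[ \t]+host:/ { sub(/host:.*/, "host: \"" h "\"") }
        in_cache && /^[ \t]+port:/ { sub(/port:.*/, "port: " p) }
        { print }
    ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Copy back instead of mv so the original file keeps its permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For this setup the call would be `set_cache_endpoint config.yaml 192.168.31.51 8088`.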

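Next, open Gitea's admin panel and go to Runners under Actions. Click the button in the top-right corner to create a Runner and copy the Registration Token shown there. Then fill in the following configuration: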

```yaml
services:
  runner:
    image: gitea/act_runner
    environment:
      CONFIG_FILE: /config.yaml
      GITEA_INSTANCE_URL: "${INSTANCE_URL}"
      GITEA_RUNNER_REGISTRATION_TOKEN: "${REGISTRATION_TOKEN}"
      GITEA_RUNNER_NAME: "${RUNNER_NAME}"
      GITEA_RUNNER_LABELS: "${RUNNER_LABELS}"
    ports:
      - "8088:8088"
    volumes:
      - ./config.yaml:/config.yaml
      - ./data:/data
      - /var/run/docker.sock:/var/run/docker.sock
```

A configuration for reference:

```yaml
services:
  runner:
    image: gitea/act_runner
    environment:
      CONFIG_FILE: /config.yaml
      GITEA_INSTANCE_URL: "https://gitea.evalexp.top"
      GITEA_RUNNER_REGISTRATION_TOKEN: "${REGISTRATION_TOKEN}"
      GITEA_RUNNER_NAME: "Runner-Node-1"
      GITEA_RUNNER_LABELS: ""
    ports:
      - "8088:8088"
    volumes:
      - ./config.yaml:/config.yaml
      - ./data:/data
      - /var/run/docker.sock:/var/run/docker.sock
```

Start it, and the Runner should then appear in Gitea.
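Docker Compose fills in `${REGISTRATION_TOKEN}` (and the other `${...}` placeholders) from the shell environment or from a `.env` file in the project directory. Keeping the token in `.env` means it is not written into the YAML itself. The following minimal sketch writes such a file, readable only by its owner. `write_runner_env` is a hypothetical helper name, and note that it overwrites any existing `.env`:

```shell
#!/bin/sh
# Hypothetical helper: write REGISTRATION_TOKEN into DIR/.env with mode 600.
# Docker Compose reads .env from the project directory for ${...} interpolation.
# Warning: this overwrites an existing .env in DIR.
write_runner_env() {
    dir="$1"; token="$2"
    if [ -z "$token" ]; then
        echo "write_runner_env: empty token" >&2
        return 1
    fi
    # Run in a subshell so the restrictive umask does not leak to the caller.
    (
        umask 077
        printf 'REGISTRATION_TOKEN=%s\n' "$token" > "$dir/.env"
    ) || return 1
    # umask only applies to newly created files; enforce the mode either way.
    chmod 600 "$dir/.env"
}
```

Usage would look like `write_runner_env . "<token copied from Gitea>"` in the directory holding the Runner's compose file, followed by `docker compose up -d`.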

Home-Assistant

Configuration:

```yaml
services:
  home-assistant:
    container_name: home-assistant
    image: "ghcr.io/home-assistant/home-assistant:stable"
    volumes:
      - ./config:/config
      - /etc/localtime:/etc/localtime:ro
      - /run/dbus:/run/dbus:ro
    restart: always
    privileged: true
    networks:
      - app-net
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.ha.rule=Host(`ha.home.evalexp.top`) || Host(`ha`) || Host(`ha.home.net`)"
      - "traefik.http.routers.ha.service=ha"
      - "traefik.http.services.ha.loadbalancer.server.port=8123"
      - "traefik.http.routers.ha.entrypoints=https"
      - "traefik.http.routers.ha.tls=true"
```

Sun-Panel

Configuration:

```yaml
services:
  sun-panel:
    image: "hslr/sun-panel:latest"
    container_name: sun-panel
    volumes:
      - ./conf:/app/conf
      # - /var/run/docker.sock:/var/run/docker.sock # mount docker.sock
      # - ./runtime:/app/runtime # mount the log directory
      # - /mnt/sata1-1:/os # disk mount point (adjust to your setup)
    # ports:
    #   - 3002:3002
    restart: always
    networks:
      - app-net
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.sun-panel.rule=Host(`nav.home.evalexp.top`) || Host(`nav`) || Host(`nav.home.net`)"
      - "traefik.http.routers.sun-panel.service=sun-panel"
      - "traefik.http.services.sun-panel.loadbalancer.server.port=3002"
      - "traefik.http.routers.sun-panel.entrypoints=https"
      - "traefik.http.routers.sun-panel.tls=true"
```

DDNS-GO

Configuration:

```yaml
services:
  ddns-go:
    image: ghcr.io/jeessy2/ddns-go
    restart: always
    container_name: ddns-go
    network_mode: host
    volumes:
      - ./data:/root
```

Then open port 9876 on this machine's IP to reach the WebUI. Uncheck IPv4 and enable IPv6. After adding a DNSPod AK/SK, you can fill in your domains.

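Make sure the option that disables access from the public network is turned on, and set a password that is as strong as possible.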
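To cross-check which address ddns-go should be publishing, you can list the host's global IPv6 addresses yourself. The following sketch reads the one-line output of `ip -6 -o addr show scope global` and prints the first address not flagged as `temporary` (privacy extensions). It assumes the iproute2 output format, in which the fourth field is `address/prefix`. `first_global_ipv6` is a hypothetical name, and the addresses in the test use the `2001:db8::/32` documentation prefix:

```shell
#!/bin/sh
# Hypothetical helper: read `ip -6 -o addr show scope global` output on stdin
# and print the first global address that is not a temporary (privacy) one.
first_global_ipv6() {
    awk '$3 == "inet6" && !/temporary/ { split($4, a, "/"); print a[1]; exit }'
}

# Usage on the host:
#   ip -6 -o addr show scope global | first_global_ipv6
```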

Navidrome

The configuration is as follows:

```yaml
services:
  navidrome:
    image: deluan/navidrome:latest
    user: "1000:1000" # should be owner of volumes
    restart: "no" # quoted: YAML 1.1 parsers read a bare no as boolean false
    networks:
      - app-net
    environment:
      ND_SCANSCHEDULE: 24h
    volumes:
      - "./data:/data"
      - "/mnt/nfs/Media/Music:/music:ro"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.navidrom.rule=Host(`music.home.evalexp.top`) || Host(`music`) || Host(`music.home.net`)"
      - "traefik.http.routers.navidrom.service=navidrom"
      - "traefik.http.services.navidrom.loadbalancer.server.port=4533"
      - "traefik.http.routers.navidrom.entrypoints=https"
      - "traefik.http.routers.navidrom.tls=true"
```

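Note that `restart: no` is used here, so this container will not come back automatically after the machine reboots. Instead, we write a custom OpenRC init script that checks the NFS mount before starting the service.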

For mounting NFS, see the article Alpine 挂载NFS (Mounting NFS on Alpine).

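Note that the service host here is an LXC container, so it takes almost no time to boot. The easy pitfall with this rc script is forgetting to declare a dependency on the Docker service. The script then tries to bring up the container before Docker is ready, which fails outright. Create `/etc/init.d/auto-start-navidrome`: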

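```shell
#!/sbin/openrc-run

description="Auto start navidrome"

depend() {
    need mount-nfs docker
    use logger
    after firewall
}

LOGFILE="/var/log/navidrome.log"

start() {
    # Wait until the marker file on the NFS share becomes visible.
    while [ ! -f '/mnt/nfs/Media/.nfs' ]; do
        echo "[$(date)] Waiting for Media mount..." >> "$LOGFILE"
        sleep 5
    done
    docker compose -f /root/AppCenter/compose.yml start navidrome
    echo "[$(date)] Navidrome started" >> "$LOGFILE"
}

stop() {
    docker compose -f /root/AppCenter/compose.yml stop navidrome
    echo "[$(date)] Navidrome stopped" >> "$LOGFILE"
}
```

You need to create the `.nfs` file yourself; the script uses it to detect whether the NFS mount succeeded. Create it on the share itself, for example with `touch /mnt/nfs/Media/.nfs` while the share is mounted. That way it is only visible when the mount is in place.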

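Then make the script executable and add it to the default runlevel:

```shell
chmod +x /etc/init.d/auto-start-navidrome
rc-update add auto-start-navidrome default
```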
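The `while` loop in the `auto-start-navidrome` script waits forever. If the NAS never comes up, the service never finishes starting, and when OpenRC starts services one after another this can also hold up the rest of the runlevel. One possible variant bounds the wait. This is a sketch that assumes giving up after a fixed time is acceptable for you. `wait_for_file` and the 300-second timeout are hypothetical choices, not part of the original script:

```shell
#!/bin/sh
# Hypothetical helper: wait until a marker file exists, checking every
# $interval seconds, and give up after $timeout seconds.
# Returns 0 once the file exists, 1 on timeout.
wait_for_file() {
    path="$1"; timeout="${2:-300}"; interval="${3:-5}"
    waited=0
    while [ ! -f "$path" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    return 0
}

# Possible use inside start() of the init script (eerror is provided by OpenRC):
#   if ! wait_for_file /mnt/nfs/Media/.nfs 300 5; then
#       eerror "NFS share not mounted, not starting navidrome"
#       return 1
#   fi
#   docker compose -f /root/AppCenter/compose.yml start navidrome
```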

Headscale

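After a certain version, Tailscale started acting up: every so often it shows a `You are logged out` prompt, which makes the official service unpleasant to use. So I switched to a self-hosted Headscale.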

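Because P2P connections go over IPv6, host networking is used directly. This service is best run in its own dedicated container, so that a problem elsewhere does not leave you unable to reach your home network.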

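Configuration:

The container below runs the Tailscale client as a subnet router. It registers against the self-hosted Headscale server through `--login-server`, and `TS_AUTHKEY` is a pre-auth key issued by that server.

```yaml
services:
  headscale:
    image: ghcr.io/tailscale/tailscale:latest
    container_name: headscale
    volumes:
      - ./data:/var/lib/tailscale
    restart: always
    network_mode: host
    environment:
      - TS_HOSTNAME=subnet-router
      - TS_STATE_DIR=/var/lib/tailscale
      - TS_AUTHKEY=???
      - TS_ROUTES=192.168.31.0/24
      - TS_EXTRA_ARGS=--login-server=https://headscale.evalexp.top
```

If you use the official Tailscale service instead, the Compose file is: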

```yaml
services:
  tailscale:
    image: ghcr.io/tailscale/tailscale:latest
    container_name: tailscale
    volumes:
      - ./data:/var/lib/tailscale
    restart: always
    network_mode: host
    environment:
      - TS_HOSTNAME=subnet-router
      - TS_STATE_DIR=/var/lib/tailscale
      - TS_AUTHKEY=tskey-auth-???
      - TS_ROUTES=192.168.31.0/24
```

Portainer

Configuration:

```yaml
services:
  portainer:
    image: portainer/portainer-ce
    container_name: portainer
    restart: always
    networks:
      - app-net
    volumes:
      - ./data:/data
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.portainer.rule=Host(`portainer.home.evalexp.top`) || Host(`portainer`) || Host(`portainer.home.net`)"
      - "traefik.http.routers.portainer.service=portainer"
      - "traefik.http.services.portainer.loadbalancer.server.port=9000"
      - "traefik.http.routers.portainer.entrypoints=https"
      - "traefik.http.routers.portainer.tls=true"
```

Next-Terminal

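Docker Compose configuration

Every maintenance task means going into PVE, which is still a bit inconvenient, so I decided to set up a bastion host. JumpServer seems too heavyweight for an all-in-one box, so I went with the lighter Next-Terminal. The core setup: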

```yaml
services:
  nt-guacd:
    container_name: nt-guacd
    image: registry.cn-beijing.aliyuncs.com/dushixiang/guacd:latest
    networks:
      - nt-net
    volumes:
      - ./data:/usr/local/next-terminal/data
    restart: always
  nt-postgresql:
    container_name: nt-postgresql
    image: registry.cn-beijing.aliyuncs.com/dushixiang/postgres:16.4
    environment:
      POSTGRES_DB: next-terminal
      POSTGRES_USER: next-terminal
      POSTGRES_PASSWORD: next-terminal
    volumes:
      - ./data/postgresql:/var/lib/postgresql/data
    networks:
      - nt-net
    restart: always
  next-terminal:
    container_name: next-terminal
    image: registry.cn-beijing.aliyuncs.com/dushixiang/next-terminal:latest
    networks:
      - app-net
      - nt-net
    # ports:
    #   - "8088:8088" # web management UI
    #   - "2022:2022" # SSH server port (optional)
    volumes:
      # - /etc/localtime:/etc/localtime:ro
      - ./data:/usr/local/next-terminal/data
      - ./logs:/usr/local/next-terminal/logs
      - ./config.yaml:/etc/next-terminal/config.yaml
    depends_on:
      - nt-postgresql
      - nt-guacd
    restart: always
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.jump.rule=Host(`jump.evalexp.top`) || Host(`jump`) || Host(`jump.home.net`)"
      - "traefik.http.routers.jump.service=jump"
      - "traefik.http.services.jump.loadbalancer.server.port=8088"
      - "traefik.http.routers.jump.entrypoints=https"
      - "traefik.http.routers.jump.tls=true"
      - "traefik.http.routers.jump.middlewares=inner-ipwhitelist@file"

networks:
  nt-net:
```

Note that the database and guacd must not be attached to app-net. They should sit on their own network, so that the other services on app-net cannot reach them directly.
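To confirm the isolation after `docker compose up`, you can ask Docker which networks each container is attached to. The following sketch uses `docker inspect` with a Go template over `.NetworkSettings.Networks`. `assert_not_on_network` is a hypothetical helper name. Docker may report network names with the Compose project prefix, so pass the exact name as shown by `docker network ls`:

```shell
#!/bin/sh
# Hypothetical helper: fail if any of the given containers is attached to
# the named Docker network (exact name as reported by Docker).
# Usage: assert_not_on_network NETWORK CONTAINER...
assert_not_on_network() {
    network="$1"; shift
    for c in "$@"; do
        nets="$(docker inspect -f '{{range $k, $v := .NetworkSettings.Networks}}{{println $k}}{{end}}' "$c")" || return 1
        if printf '%s\n' "$nets" | grep -qxF -- "$network"; then
            echo "$c is attached to $network" >&2
            return 1
        fi
    done
    return 0
}

# Example: assert_not_on_network app-net nt-postgresql nt-guacd && echo isolated
```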

Application configuration

And the corresponding `config.yaml`:

```yaml
Database:
  Enabled: true
  Type: postgres
  Postgres:
    Hostname: nt-postgresql
    Port: 5432
    Username: next-terminal
    Password: next-terminal
    Database: next-terminal
  ShowSql: false
log:
  Level: debug # log level: debug, info, warning, error
  Filename: ./logs/nt.log
Server:
  Addr: "0.0.0.0:8088"
App:
  Website:
    AccessLog: "./logs/access.log" # access log path for web assets
  Recording:
    Type: "local" # where recordings are stored: local or s3
    Path: "/usr/local/next-terminal/data/recordings"
  Guacd:
    Drive: "/usr/local/next-terminal/data/drive"
    Hosts:
      - Hostname: nt-guacd
        Port: 4822
        Weight: 1
  # Reverse proxy settings, see https://docs.next-terminal.typesafe.cn/install/config-desc.html for details
  ReverseProxy:
    Enabled: false # enable the reverse proxy
    HttpEnabled: true # enable the http reverse proxy
    HttpAddr: ":80" # http listen address
    HttpRedirectToHttps: false # force http requests to redirect to https
    HttpsEnabled: true # enable the https reverse proxy
    HttpsAddr: ":443" # https listen address
    SelfProxyEnabled: true # enable self-proxy
    SelfDomain: "nt.yourdomain.com" # self-proxy domain, takes effect when SelfProxyEnabled is true
    Root: "" # system root URL, takes effect when SelfProxyEnabled is false. Example: https://nt.yourdomain.com
    IpExtractor: "direct" # how the client IP is extracted: direct, x-forwarded-for or x-real-ip
    IpTrustList: # trusted IP ranges
      - "0.0.0.0/0"
```

Extra Traefik configuration

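You may have noticed that the Docker Compose configuration above uses a middleware called `inner-ipwhitelist@file`, which needs some explanation. The core reason is the same as in the DNS resolution article. The bastion host sits behind a Traefik instance that is reachable from the public Internet, so someone outside could reach it by sending a request with a forged `Host` header (Host collision). That is unsafe, so a middleware is added that only allows access from the internal network.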

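The Traefik dynamic configuration for this middleware is shown below. Note that `ipWhiteList` has been deprecated since Traefik v2.10 in favour of `ipAllowList`, which takes the same `sourceRange` option. As far as I know, Traefik v3 only accepts `ipAllowList`.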

```yaml
http:
  middlewares:
    inner-ipwhitelist:
      ipWhiteList:
        sourceRange:
          - "192.168.0.0/16"
```
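To reason about which clients this middleware admits, here is a small POSIX sh sketch of an IPv4 CIDR containment test, the same kind of check applied against `sourceRange`. It is an illustration only, not Traefik's code, and it ignores IPv6. Keep in mind that Traefik checks the address it sees as the client, by default the peer connecting to it; its `ipStrategy` option changes that when another proxy sits in front. `ip_to_int` and `ip_in_cidr` are hypothetical names:

```shell
#!/bin/sh
# Illustrative IPv4-only CIDR check (not Traefik's implementation).
# ip_in_cidr IP CIDR -> exit status 0 if IP lies inside CIDR, 1 otherwise.

ip_to_int() {
    # Split the dotted quad into four octets and pack them into one integer.
    IFS=. read -r o1 o2 o3 o4 <<EOF
$1
EOF
    echo $(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
}

ip_in_cidr() {
    addr="$1"; net="${2%/*}"; bits="${2#*/}"
    # A /0 prefix matches every address.
    [ "$bits" -eq 0 ] && return 0
    # Keep the top $bits bits; the final & trims the shift to 32 bits.
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$addr") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, `ip_in_cidr 192.168.31.51 192.168.0.0/16` succeeds, while `ip_in_cidr 172.17.0.1 192.168.0.0/16` fails. A request whose source address appears as a Docker bridge address would therefore be rejected by this whitelist.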