Service Orchestration

The overall layout is as follows:

├── app
│   ├── compose.yml
│   ├── serviceA
│   └── serviceB

Here compose.yml is the core file: it pulls in the docker-compose.yml files of serviceA and serviceB via include. The base compose.yml is:

include:
  # Remember to add every new service here
  - serviceA/docker-compose.yml
  - serviceB/docker-compose.yml

networks:
  app_net:
    ipam:
      config:
        - subnet: "172.20.10.0/24"

Router - Traefik

Blog

Traefik reverse-proxies it automatically, configured entirely through labels:

services:
  blog:
    image: registry.cn-shanghai.aliyuncs.com/evalexp-private/blog:latest
    restart: always
    hostname: blog.evalexp.top
    container_name: blog
    networks:
      app_net:
    logging:
      options:
        max-size: "5m"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.blog.rule=Host(`blog.evalexp.top`) || Host(`evalexp.top`)"
      - "traefik.http.routers.blog.service=blog"
      - "traefik.http.services.blog.loadbalancer.server.port=80"
      - "traefik.http.routers.blog.entrypoints=https"
      - "traefik.http.routers.blog.tls=true"

No further configuration is needed.

Nextcloud

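Although Nextcloud is fairly heavyweight, WebDAV support became very poor after an OCIS upgrade: TrueNAS could still use WebDAV for push backups, but some applications could no longer log in to the file system over WebDAV with an app token. So I switched back to Nextcloud.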

Configuration

Nextcloud's greatest strength is its thorough documentation, and starting it with Docker is very smooth. Here is the configuration:

services:
  nextcloud:
    image: nextcloud:apache
    container_name: nextcloud
    restart: unless-stopped
    volumes:
      - /datapool/encryption/nextcloud_data:/var/www/html
    environment:
      - TZ=Asia/Shanghai
      - MYSQL_HOST=nextcloud-db
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_PASSWORD=nextcloud
      - REDIS_HOST=nextcloud-redis
      - REDIS_HOST_PORT=6379
      - OVERWRITECLIURL=https://nextcloud.evalexp.top
      - OVERWRITEPROTOCOL=https
      - OVERWRITEHOST=nextcloud.evalexp.top
      - TRUSTED_PROXIES=172.20.10.0/24
    extra_hosts:
      - "nextcloud.evalexp.top:43.161.215.97"
    networks:
      - app_net
      - nextcloud_net
    depends_on:
      - nextcloud-db
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.nextcloud.rule=Host(`nextcloud.evalexp.top`)"
      - "traefik.http.routers.nextcloud.entrypoints=https"
      - "traefik.http.routers.nextcloud.tls=true"
      - "traefik.http.routers.nextcloud.service=nextcloud"
      - "traefik.http.services.nextcloud.loadbalancer.server.port=80"
      - "traefik.http.middlewares.nc-secure-headers.headers.stsSeconds=15552000"
      - "traefik.http.middlewares.nc-secure-headers.headers.stsIncludeSubdomains=true"
      - "traefik.http.middlewares.nc-secure-headers.headers.stsPreload=true"
      - "traefik.http.middlewares.nc-secure-headers.headers.forceSTSHeader=true"
      - "traefik.http.routers.nextcloud.middlewares=nc-secure-headers@docker"

  nextcloud-cron:
    image: nextcloud:apache
    container_name: nextcloud-cron
    restart: unless-stopped
    volumes:
      - /datapool/encryption/nextcloud_data:/var/www/html
    networks:
      - nextcloud_net
    environment:
      - TZ=Asia/Shanghai
      - MYSQL_HOST=nextcloud-db
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_PASSWORD=nextcloud
      - REDIS_HOST=nextcloud-redis
      - REDIS_HOST_PORT=6379
      - OVERWRITECLIURL=https://nextcloud.evalexp.top
      - OVERWRITEPROTOCOL=https
      - OVERWRITEHOST=nextcloud.evalexp.top
      - TRUSTED_PROXIES=172.20.10.0/24
    entrypoint: /cron.sh
    depends_on:
      - nextcloud-db

  nextcloud-db:
    image: mariadb:10.11
    container_name: nextcloud-db
    restart: unless-stopped
    command: --transaction-isolation=READ-COMMITTED --binlog-format=ROW
    networks:
      - nextcloud_net
    extra_hosts:
      - "nextcloud.evalexp.top:43.161.215.97"
    volumes:
      - nextcloud_db_data:/var/lib/mysql
    environment:
      - MYSQL_ROOT_PASSWORD=root_nextcloud
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_PASSWORD=nextcloud
      - TZ=Asia/Shanghai

  nextcloud-redis:
    image: redis:alpine
    container_name: nextcloud-redis
    restart: unless-stopped
    networks:
      - nextcloud_net
    command: redis-server --save "" --appendonly no

networks:
  nextcloud_net:

volumes:
  nextcloud_db_data:

Post-Install Fixes

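After installation a few issues usually remain, such as the maintenance window and missing database indices, so it is handy to keep a small script in the nextcloud service directory for quick fixes: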

#!/bin/sh
set -e

# compose.yml lives one level above the directory containing this script
SCRIPT_DIR="$(cd "$(dirname "$0")" && pwd)"
COMPOSE_FILE="${SCRIPT_DIR}/../compose.yml"

# Nextcloud service name (docker compose service name)
NEXTCLOUD_SERVICE="nextcloud"

echo "Using compose file: ${COMPOSE_FILE}"

# Wrapper that runs occ as www-data inside the Nextcloud container
occ() {
  docker compose -f "${COMPOSE_FILE}" exec -u www-data "${NEXTCLOUD_SERVICE}" php occ "$@"
}

echo "==> Setting maintenance window to 03:00 (UTC+8 -> UTC 19)"
occ config:system:set maintenance_window_start --type=integer --value=19

echo "==> Running mimetype migration (include expensive)"
occ maintenance:repair --include-expensive

echo "==> Adding missing database indices"
occ db:add-missing-indices

echo "==> Done. Nextcloud maintenance tasks completed successfully."

OCIS

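Nextcloud really is heavy: it needs a relational database (such as PostgreSQL or MariaDB) plus Redis, and it is PHP at its core. A Nextcloud deployment is certainly pleasant to use, but it always felt like a considerable load on the machine.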

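OCIS is an alternative to Nextcloud; it is essentially the next generation of ownCloud. It can run as a single binary, needs no relational database and no cache database, uses a microservice architecture, and is written in efficient Go, among many other advantages. It has drawbacks too: its ecosystem and documentation are still incomplete.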

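Weighed against its advantages, I still think switching to OCIS is well worth it. After switching I can also use Traefik as the gateway (no more worrying about php-fpm volume mappings), which lets me move off the Nginx configuration entirely; that makes the overall service orchestration much cleaner.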

Reference: Discover oCIS with Docker | ownCloud

Generating the Configuration

Deploying OCIS first requires generating a configuration file. Run the following in service/app/ocis:

sudo touch ocis.yaml && sudo chown 1000:1000 ocis.yaml && docker run --rm -it -v $(pwd)/ocis.yaml:/etc/ocis/ocis.yaml owncloud/ocis:latest init --force-overwrite

The output should look like this:

evalexp@VM-8-6-debian:~/service/app/ocis$ sudo touch ocis.yaml && sudo chown 1000:1000 ocis.yaml && docker run --rm -it -v $(pwd)/ocis.yaml:/etc/ocis/ocis.yaml owncloud/ocis:latest init --force-overwrite
Do you want to configure Infinite Scale with certificate checking disabled?
This is not recommended for public instances! [yes | no = default] yes

=========================================
generated OCIS Config
=========================================
configpath : /etc/ocis/ocis.yaml
user : admin
password : ???
=========================================

An older config file has been backuped to
/etc/ocis/ocis.yaml.2025-07-31-15-15-16.backup

Note: answer yes here so that certificate checking is disabled; the instance sits behind a reverse proxy anyway. The generated admin password is printed in the output and is also stored in the configuration file.

Installing the Plugin

The generated configuration file needs no changes. Next, install the core plugin, GitHub - mschlachter/ocis-app-tokens: This plugin for ownCloud Infinite Scale enables a UI to create and manage app tokens, which enable third-party apps to connect to Infinite Scale.

Run:

mkdir plugins
curl -Lo ocis-app-tokens.zip https://github.com/mschlachter/ocis-app-tokens/releases/download/v0.0.4/ocis-app-tokens-v0.0.4.zip
unzip ocis-app-tokens.zip -d plugins/ocis-app-tokens && rm ocis-app-tokens.zip
sudo chown -R 1000:1000 plugins

The directory structure should now look like this:

.
├── docker-compose.yml
├── ocis.yaml
└── plugins
    └── ocis-app-tokens
        ├── index.js
        ├── js
        │   └── chunks
        │       └── App-DwLmhITC.mjs
        └── manifest.json

5 directories, 5 files

Docker Deployment

The Compose file:

services:
  ocis:
    image: owncloud/ocis:latest
    restart: unless-stopped
    container_name: ocis
    networks:
      app_net:
    logging:
      options:
        max-size: "5m"
    volumes:
      - /datapool/encryption/ocis_data:/var/lib/ocis
      - ./ocis.yaml:/etc/ocis/ocis.yaml
      - ./plugins:/web/plugins
    environment:
      OCIS_INSECURE: "true"
      OCIS_URL: "https://ocis.evalexp.top"
      OCIS_LOG_LEVEL: error
      OCIS_ADD_RUN_SERVICES: "auth-app"
      PROXY_ENABLE_APP_AUTH: "true"
      WEB_ASSET_APPS_PATH: "/web/plugins"
      PROXY_TLS: "false"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.ocis.rule=Host(`ocis.evalexp.top`)"
      - "traefik.http.routers.ocis.service=ocis"
      - "traefik.http.services.ocis.loadbalancer.server.port=9200"
      - "traefik.http.routers.ocis.entrypoints=https"
      - "traefik.http.routers.ocis.tls=true"

Note that all of these environment variables are required. OCIS_ADD_RUN_SERVICES and PROXY_ENABLE_APP_AUTH enable WebDAV authentication through the auth-app service; TrueNAS push backups depend on this, so they must be set.

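Note that the data directory lives on the ZFS pool. Settings stored in the data directory take precedence over the configuration file, so if the password is rejected, first check whether the data directory is really empty.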

Syncing

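Also note that when syncing from TrueNAS, the WebDAV URL is fairly complex and must be copied and pasted exactly. Avoid syncing against /dav/files/admin/ where possible: that endpoint is slower than the UUID-addressed one.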

The link looks like this: https://ocis.evalexp.top/dav/spaces/dfd54c02-dd89-4fab-8018-a175d7252950$c812b03e-5034-49b2-866c-77358187351a

This is also ownCloud's default endpoint.
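The space ID in that URL contains a literal `$`, which matters when you script against it: inside double quotes the shell expands `$c812b03e` as an (unset) variable and silently drops it. A minimal sketch, assuming a POSIX shell; `ocis_space_url` is a hypothetical helper, and the commented curl call with `USER`/`APP_TOKEN` placeholders is an untested illustration of probing the endpoint with an app token:

```shell
#!/bin/sh
# Base URL and space ID copied from the example link above.
# Single quotes keep the '$' inside the space ID literal; with double quotes
# the shell would expand "$c812b03e" as a variable and drop it from the URL.
OCIS_BASE='https://ocis.evalexp.top'
SPACE_ID='dfd54c02-dd89-4fab-8018-a175d7252950$c812b03e-5034-49b2-866c-77358187351a'

# Hypothetical helper: print the spaces WebDAV URL for a space ID.
ocis_space_url() {
  printf '%s/dav/spaces/%s\n' "$OCIS_BASE" "$1"
}

ocis_space_url "$SPACE_ID"

# Untested illustration: list the space root with an app token.
# USER and APP_TOKEN are placeholders.
# curl -u "USER:APP_TOKEN" -X PROPFIND -H 'Depth: 1' "$(ocis_space_url "$SPACE_ID")"
```

The same single-quoting applies anywhere the URL ends up in a shell command or a `.env` file that gets interpolated.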

IPFS Reverse Proxy

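When Traefik cannot discover a backend automatically through Docker labels, for example when reverse-proxying IPFS, it needs explicit configuration; the dynamic File Provider is the recommended way to add it. Here is the IPFS example: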

http:
  routers:
    ipfs:
      rule: "Host(`tools.evalexp.top`) && PathPrefix(`/ipfs`)"
      entryPoints:
        - https
      service: ipfs
      middlewares:
        - rewrite-ipfs
      tls: {}
  middlewares:
    rewrite-ipfs:
      replacePathRegex:
        regex: "^/ipfs/(.*)"
        replacement: "/ipfs/${1}"
  services:
    ipfs:
      loadBalancer:
        servers:
          - url: "https://ipfs.io"
        passHostHeader: false

The middleware here is useless (it rewrites the path to itself) and can simply be removed.
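To see why rewrite-ipfs is a no-op, apply the same pattern to a few sample paths. This sketch uses `sed -E` as a stand-in for Traefik's Go regexp engine (the two agree on this simple pattern, with `\1` playing the role of `${1}`); the CID values are made-up placeholders:

```shell
#!/bin/sh
# Emulate replacePathRegex: regex "^/ipfs/(.*)" -> replacement "/ipfs/${1}"
rewrite_ipfs() {
  printf '%s\n' "$1" | sed -E 's#^/ipfs/(.*)#/ipfs/\1#'
}

for p in /ipfs/QmExampleCid /ipfs/QmExampleCid/readme.txt; do
  # Each rewritten path equals its input, so the middleware changes nothing.
  printf '%s -> %s\n' "$p" "$(rewrite_ipfs "$p")"
done
```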

Reverse-Proxying Non-Local Services

In fact, the IPFS reverse proxy above is already a reverse proxy to a non-local service, with a middleware attached.

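On an internal network, what if the service to proxy speaks HTTPS with a self-signed certificate, such as the PVE (Proxmox VE) web UI? If you take the template above and only change the url, Traefik answers with a 500 status code; the root cause is that Traefik cannot verify the PVE certificate.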

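On an internal network, certificates are usually issued by an internal CA, and Traefik does not trust that CA's root certificate by default. Making Traefik trust it is fairly cumbersome and intrudes on system-level configuration, so I don't recommend it.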

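Fortunately, Traefik provides a serversTransports section under http for transport-level settings. For PVE, a transport that skips certificate verification makes Traefik accept the self-signed certificate without checking it: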

http:
  routers:
    pve:
      rule: "Host(`pve.evalexp.top`) || Host(`pve`) || Host(`pve.home.net`)"
      entryPoints:
        - https
      service: pve
      tls: {}
  services:
    pve:
      loadBalancer:
        servers:
          - url: "https://192.168.31.3:8006"
        passHostHeader: false
        serversTransport: pve-transport
  serversTransports:
    pve-transport:
      insecureSkipVerify: true

Headscale

Overall, take the example config file from the repository, remove the TLS-related settings, and adjust the server URL and the listen address accordingly.

The Compose file:

services:
  headscale:
    image: headscale/headscale
    restart: unless-stopped
    container_name: headscale
    volumes:
      - ./config.yaml:/etc/headscale/config.yaml
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
      - /datapool/encryption/headscale_data:/var/lib/headscale
    ports:
      - "3478:3478"
      - "3478:3478/udp"
    command: serve
    networks:
      - app_net
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.headscale.rule=Host(`headscale.evalexp.top`)"
      - "traefik.http.routers.headscale.service=headscale"
      - "traefik.http.services.headscale.loadbalancer.server.port=8080"
      - "traefik.http.routers.headscale.entrypoints=https"
      - "traefik.http.routers.headscale.tls=true"
      # CORS Middleware Configuration
      - "traefik.http.middlewares.headscale-cors.headers.accessControlAllowMethods=GET,POST,PUT,PATCH,DELETE,OPTIONS"
      - "traefik.http.middlewares.headscale-cors.headers.accessControlAllowHeaders=Authorization,Content-Type"
      - "traefik.http.middlewares.headscale-cors.headers.accessControlAllowOriginList=https://headscale.evalexp.top"
      - "traefik.http.middlewares.headscale-cors.headers.accessControlMaxAge=100"
      # Attach Middleware to Router
      - "traefik.http.routers.headscale.middlewares=headscale-cors"

  headscale-admin:
    restart: always
    container_name: headscale-admin
    image: goodieshq/headscale-admin:latest
    networks:
      - app_net
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.headscale-admin.rule=Host(`headscale.evalexp.top`) && PathPrefix(`/admin`)"
      - "traefik.http.routers.headscale-admin.service=headscale-admin"
      - "traefik.http.services.headscale-admin.loadbalancer.server.port=80"
      - "traefik.http.routers.headscale-admin.entrypoints=https"
      - "traefik.http.routers.headscale-admin.tls=true"

Also add a small CLI wrapper:

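#!/bin/bash
docker exec -it headscale headscale "$@"

Note that this also sets up an admin panel. Traefik ranks routers by rule length by default, and the panel's rule (Host plus PathPrefix) is the longer one, so requests under /admin match the panel first.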

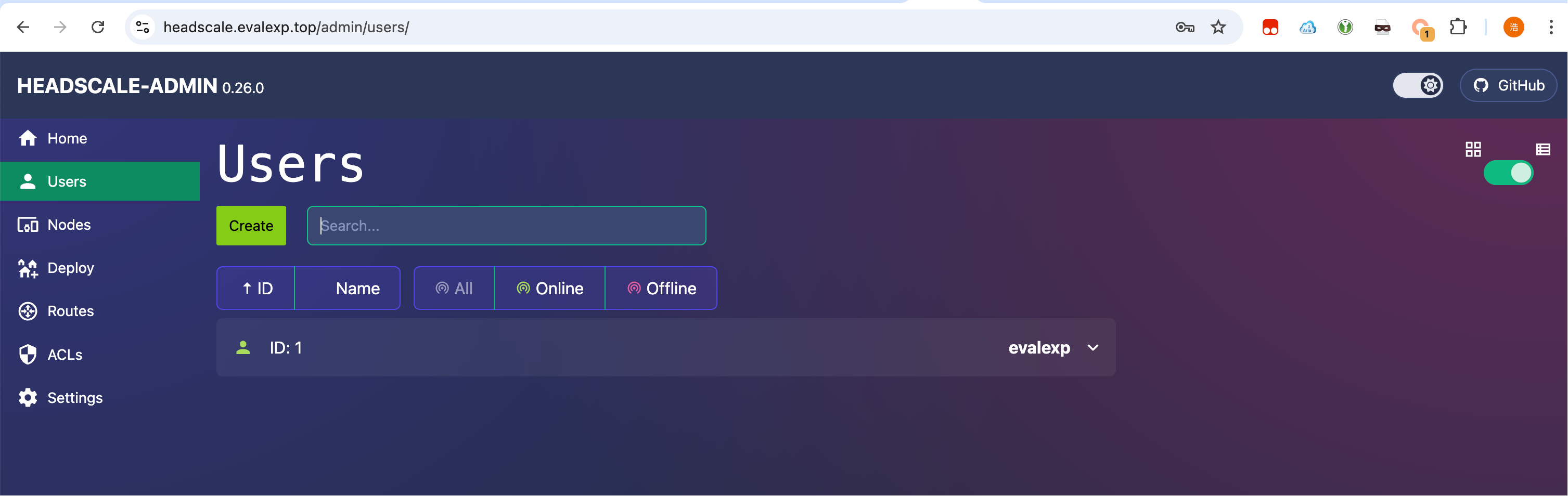
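Traefik's default router priority is the length of the rule string, and the highest priority wins when several routers match. A quick sketch that compares the two rules from the Compose file above using the shell's `${#var}` length expansion; this mirrors Traefik's default only as long as neither router sets an explicit `priority` label:

```shell
#!/bin/sh
# Rules copied from the headscale and headscale-admin labels above.
# Single quotes keep the backticks literal.
RULE_HEADSCALE='Host(`headscale.evalexp.top`)'
RULE_ADMIN='Host(`headscale.evalexp.top`) && PathPrefix(`/admin`)'

# Default Traefik priority = number of characters in the rule.
rule_priority() {
  printf '%s\n' "${#1}"
}

echo "headscale:       $(rule_priority "$RULE_HEADSCALE")"  # 29
echo "headscale-admin: $(rule_priority "$RULE_ADMIN")"      # 53
```

Because 53 > 29, a request for `/admin/...` is routed to headscale-admin, while everything else falls through to headscale.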

Creating a User

evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh users create evalexp
User created

Creating an API Key

evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh apikeys create
kJejtJR.xxx-jwrz
evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh apikeys list
ID | Prefix | Expiration | Created
1 | kJejtJR | 2025-11-25 03:50:01 | 2025-08-27 03:50:01

Setting Up the Panel

Enter the API key created above into the admin panel and it is ready to use.

The panel makes it quick to create pre-auth keys and the like. For better security, delete the API key once you are done with it.

Joining the Subnet

Next, connect the home NAS as a subnet router. The Compose file:

services:
  tailscale:
    image: ghcr.io/tailscale/tailscale:latest
    container_name: tailscale
    volumes:
      - ./data:/var/lib/tailscale
    restart: always
    network_mode: host
    environment:
      - TS_HOSTNAME=subnet-router
      - TS_STATE_DIR=/var/lib/tailscale
      - TS_AUTHKEY=???
      - TS_ROUTES=192.168.31.0/24
      - TS_EXTRA_ARGS=--login-server=https://headscale.evalexp.top

Compared with joining the official Tailscale service, the only difference is the extra TS_EXTRA_ARGS, which points the client at our Headscale server.

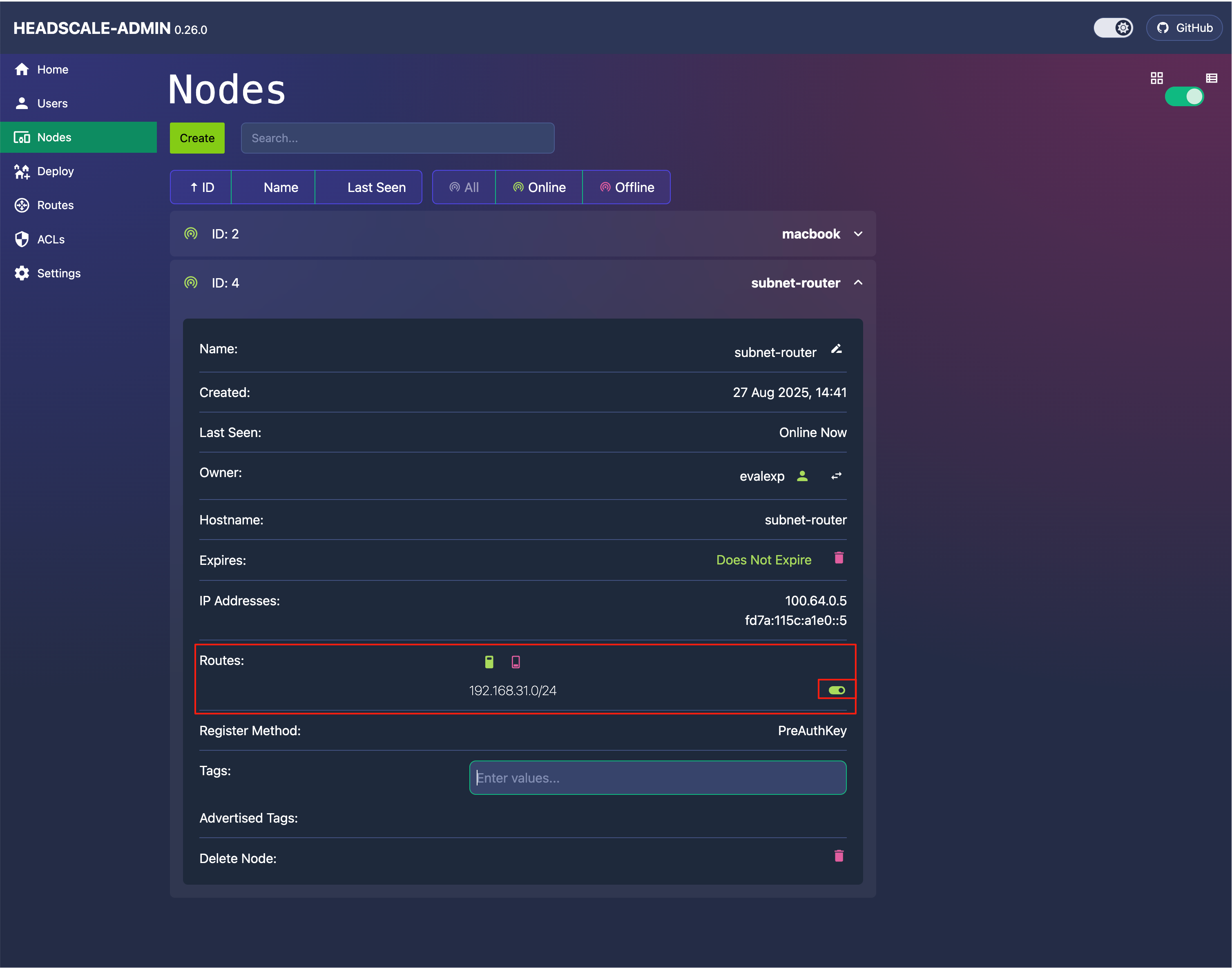

Once the node has joined, approve its subnet. From the command line:

evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh nodes list-routes
ID | Hostname | Approved | Available | Serving (Primary)
4 | subnet-router | | 192.168.31.0/24 |

The node with ID 4, named subnet-router, offers the 192.168.31.0/24 subnet:

evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh nodes approve-routes -i 4 --routes 192.168.31.0/24
Node updated
evalexp@VM-8-6-debian:~/service/app/headscale$ ./headscale-cli.sh nodes list-routes
ID | Hostname | Approved | Available | Serving (Primary)
4 | subnet-router | 192.168.31.0/24 | 192.168.31.0/24 | 192.168.31.0/24

The subnet is now reachable.

The panel is faster, of course:

Open the node there and approve the subnet route directly.

DNS Resolution

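The default configuration uses 1.1.1.1 for DNS, which performs poorly in mainland China; without a domestic resolver, some sites fail to load once Tailscale is enabled. So lower the priority of 1.1.1.1 and add fast domestic DNS servers first: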

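# List of DNS servers to expose to clients.
nameservers:
  global:
    - 223.5.5.5
    - 119.29.29.29
    - 2400:3200::1
    #- 2402:4e00::
    - 1.1.1.1
    #- 1.0.0.1
    - 2606:4700:4700::1111
    - 2606:4700:4700::1001

In addition, on the home network OpenWRT's hosts feature already takes care of resolving specific domains. When connecting from outside through Tailscale, however, OpenWRT's resolver is not available, so the built-in DNS of Tailscale (served by Headscale) can be used instead. Take my jump host as an example: it has no DDNS record and is reverse-proxied by Traefik, so I need a custom DNS record to reach it by name.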

extra_records: #[]
  - name: "jump.evalexp.top"
    type: "A"
    value: "192.168.31.8"

With this extra DNS record configured, devices connected through Tailscale can reach the host by its domain name automatically.

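The jump host deliberately has no DDNS record, for security. However, since the AIO setup runs only one Traefik instance, the jump host can still be reached by Host collision, i.e. sending a request with its Host header to that Traefik; if the jump host has a vulnerability, the risk remains significant. So attach a filtering middleware to its Traefik router, for example one that only allows internal IP addresses.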
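A minimal sketch of such a filter for the file provider, written as a shell heredoc so the file can be generated in place. Assumptions: Traefik v3 (where the middleware is called `ipAllowList`; v2 calls it `ipWhiteList`); the output path `OUT` and the middleware name `lan-only` are hypothetical; the source ranges are the home LAN plus Headscale's default `100.64.0.0/10` tailnet prefix, so adjust them to your own config. The jump host's router would then list `lan-only@file` in its `middlewares`.

```shell
#!/bin/sh
# Write a Traefik dynamic-config file defining an IP allow-list middleware.
# OUT and the middleware name "lan-only" are hypothetical; point OUT at your file-provider directory.
OUT="${OUT:-./lan-only.yml}"

# Quoted 'EOF' prevents the shell from expanding anything inside the heredoc.
cat > "$OUT" <<'EOF'
http:
  middlewares:
    lan-only:
      ipAllowList:              # Traefik v3 name; Traefik v2 uses ipWhiteList
        sourceRange:
          - "192.168.31.0/24"   # home LAN
          - "100.64.0.0/10"     # Headscale's default IPv4 tailnet prefix (assumed)
EOF

echo "wrote $OUT"
```

Requests arriving with a source address outside these ranges should be rejected with 403 before they reach the jump host.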

Portainer Agent

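With Portainer Agent, a single panel at home can manage both the Docker host on the server and all containers on the NAS, so I run Portainer Agent on the Hong Kong server to expose its Docker for management.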

Configuration:

services:
  portainer-agent:
    image: portainer/agent:2.33.1
    container_name: portainer-agent
    restart: always
    networks:
      - app_net
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    labels:
      - "traefik.enable=true"
      - "traefik.tcp.routers.portainer-agent.rule=HostSNI(`pa-01-hk.evalexp.top`) || HostSNI(`pa-01-hk`)"
      - "traefik.tcp.routers.portainer-agent.entrypoints=https"
      - "traefik.tcp.routers.portainer-agent.service=portainer-agent"
      - "traefik.tcp.routers.portainer-agent.tls=true"
      - "traefik.tcp.routers.portainer-agent.tls.passthrough=true"
      - "traefik.tcp.services.portainer-agent.loadbalancer.server.port=9001"

Once this is in place, add an environment in Portainer with the address pa-01-hk.evalexp.top:443 to connect to the Portainer Agent.

SubConverter

Configuration:

services:
  subconverter:
    container_name: subconverter
    image: ghcr.io/metacubex/subconverter:latest
    restart: always
    networks:
      - app_net
    volumes:
      - ./base:/base
    logging:
      options:
        max-size: "5m"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.subconverter.rule=Host(`tools.evalexp.top`) && PathPrefix(`/******/******/`)"
      - "traefik.http.routers.subconverter.service=subconverter"
      - "traefik.http.services.subconverter.loadbalancer.server.port=25500"
      - "traefik.http.routers.subconverter.entrypoints=https"
      - "traefik.http.routers.subconverter.tls=true"
      - "traefik.http.routers.subconverter.middlewares=subconverter-stripprefix"
      - "traefik.http.middlewares.subconverter-stripprefix.stripprefix.prefixes=/******/******"

If you don't want others using the service, make the PathPrefix harder to guess, and keep the stripprefix prefix in sync with it.
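One way to get a hard-to-guess prefix is to generate random path segments once and reuse them in both the router rule and the stripprefix middleware. A sketch assuming `/dev/urandom` and `od` are available; `random_segment` is a hypothetical helper, and the printed lines simply mirror the two labels above with the generated values filled in:

```shell
#!/bin/sh
# Print N random bytes (default 8) as a lowercase hex string, no trailing newline.
random_segment() {
  od -An -N"${1:-8}" -tx1 /dev/urandom | tr -d ' \n'
}

SEG1="$(random_segment)"
SEG2="$(random_segment)"

# The router matches the prefix with a trailing slash; stripprefix removes it
# without the slash, so the backend still sees paths such as /sub or /getprofile.
printf '%s\n' \
  "traefik.http.routers.subconverter.rule=Host(\`tools.evalexp.top\`) && PathPrefix(\`/$SEG1/$SEG2/\`)" \
  "traefik.http.middlewares.subconverter-stripprefix.stripprefix.prefixes=/$SEG1/$SEG2"
```

Hex output contains no `$`, so the generated values can be pasted into Compose labels without extra escaping.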

Adding Self-Hosted Nodes

In the base directory, edit pref.toml and set the following under [common]:

# Insert subscription links to requests. Can be used to add node(s) to all exported subscriptions.
enable_insert = true
# URLs to insert before subscription links, can be used to add node(s) to all exported subscriptions, supports local files/URL
insert_url = ["vmess://eyJh...=="]
# Prepend inserted URLs to subscription links. Nodes in insert_url will be added to groups first with non-group-specific match pattern.
prepend_insert_url = true
# Exclude nodes which remarks match the following patterns. Supports regular expression.
exclude_remarks = ["(到期|剩余流量|时间|官网|产品)"]

Custom Profiles

When you don't want the request URL to get too long, define a custom profile (base/profiles/clash.ini):

[Profile]
;This is an example profile for the /getprofile interface
;The options works the same as the arguments in the /sub interface
;Arguments that needed URLEncode before is not needed here
;For more available options, please check the readme section
target=clash
url=
exclude=(到期|剩余流量|时间|官网|产品|平台|没有选择|域名|套餐|网址|教程|客户端|如果只显示此节点|更新订阅)
config=https://raw.githubusercontent.com/evalexp/selfrules/refs/heads/main/Clash/config/Online.ini
filename=Clash Merge All
;config=config/example_external_config.ini
;ver=3
;udp=true
;emoji=false

After that, a request to /getprofile?name=profiles/clash.ini is all you need.
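Both `exclude_remarks` in pref.toml and `exclude=` in the profile are regular expressions matched against each node's remark, and matching nodes are dropped. A rough way to preview what a pattern removes, using `grep -E` as an approximation of subconverter's own regex engine (adequate for plain alternations like this one); the sample remarks are invented:

```shell
#!/bin/sh
# Pattern copied from the exclude= line in base/profiles/clash.ini
EXCLUDE='(到期|剩余流量|时间|官网|产品|平台|没有选择|域名|套餐|网址|教程|客户端|如果只显示此节点|更新订阅)'

# Read remarks on stdin and print only those that survive the filter.
kept_nodes() {
  grep -Ev "$EXCLUDE"
}

# Only the two real-looking node names are printed.
printf '%s\n' \
  '香港 01' \
  '剩余流量:100GB' \
  '套餐到期:2025-12-31' \
  '日本 02' | kept_nodes
```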

Watchtower

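The goal is to watch the open-source tool services and the blog for new images and, when one appears, pull it and recreate the container automatically. Configuration: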

services:
  watchtower:
    image: containrrr/watchtower:latest
    container_name: watchtower
    restart: always
    networks:
      app_net:
        # ipv4_address: 172.20.10.251
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /home/evalexp/.docker/config.json:/config.json:ro
    command: --interval 30 cyberchef blog
    logging:
      options:
        max-size: "5m"

Note that the containers to watch are listed by container name in command.

Rustdesk

This follows the official guide directly. Configuration:

services:
  rustdesk-hbbs:
    container_name: rustdesk-hbbs
    image: ghcr.io/rustdesk/rustdesk-server:latest
    command: hbbs
    volumes:
      - ./data:/root
    network_mode: "host"
    depends_on:
      - rustdesk-hbbr
    restart: unless-stopped

  rustdesk-hbbr:
    container_name: rustdesk-hbbr
    image: ghcr.io/rustdesk/rustdesk-server:latest
    command: hbbr
    volumes:
      - ./data:/root
    network_mode: "host"
    restart: unless-stopped

I no longer recommend self-hosting it: port 21116 is heavily blocked in mainland China, and the experience has become really poor.

Cyberchef

Configuration:

services:
  cyberchef:
    image: ghcr.io/gchq/cyberchef:latest
    restart: always
    container_name: cyberchef
    networks:
      - app_net
    logging:
      options:
        max-size: "5m"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.cyberchef.rule=Host(`tools.evalexp.top`) && PathPrefix(`/cyberchef`)"
      - "traefik.http.routers.cyberchef.service=cyberchef"
      - "traefik.http.services.cyberchef.loadbalancer.server.port=80"
      - "traefik.http.routers.cyberchef.entrypoints=https"
      - "traefik.http.routers.cyberchef.tls=true"
      - "traefik.http.routers.cyberchef.middlewares=cyberchef-redirect,cyberchef-stripprefix"
      - "traefik.http.middlewares.cyberchef-stripprefix.stripprefix.prefixes=/cyberchef"
      - "traefik.http.middlewares.cyberchef-redirect.redirectregex.regex=^https://(.*)/cyberchef$$"
      - "traefik.http.middlewares.cyberchef-redirect.redirectregex.replacement=https://$${1}/cyberchef/"
      - "traefik.http.middlewares.cyberchef-redirect.redirectregex.permanent=true"
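The `$$` in these labels is Compose escaping: Compose interpolates `$` itself, so `$$` reaches Traefik as a single `$`, giving the regex `^https://(.*)/cyberchef$` and the replacement `https://${1}/cyberchef/`. The redirect presumably exists so that `/cyberchef` without a trailing slash becomes `/cyberchef/` before stripprefix runs; otherwise the browser would resolve CyberChef's relative asset paths against `/` instead of `/cyberchef/`. A sketch that emulates the redirect with `sed -E`, standing in for Traefik's Go regexp (`\1` plays the role of `${1}`):

```shell
#!/bin/sh
# Emulate redirectregex: ^https://(.*)/cyberchef$ -> https://${1}/cyberchef/
cyberchef_redirect() {
  printf '%s\n' "$1" | sed -E 's#^https://(.*)/cyberchef$#https://\1/cyberchef/#'
}

cyberchef_redirect 'https://tools.evalexp.top/cyberchef'   # gains the trailing slash
cyberchef_redirect 'https://tools.evalexp.top/cyberchef/'  # no match, left unchanged
```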

Sablier

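Note that once Sablier manages a service, that service can no longer be configured automatically through Docker labels, because Traefik's Docker provider does not pick up routes from stopped containers. Weigh the trade-off yourself: if resources are tight, go for it; otherwise I don't recommend it, and Nginx is the better option.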

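Quite a few services run on the server. Those not used day to day can stay stopped and be started only when needed, saving resources so the other long-running services stay responsive.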

For a first pass, the services I consider stopping and starting on demand are:

- Headscale Admin

- Cyberchef

Installing Sablier

First, start Sablier with this Docker configuration:

services:
  sablier:
    image: sablierapp/sablier:1.10.1
    command:
      - start
      - --provider.name=docker
    networks:
      - app_net
    volumes:
      - '/var/run/docker.sock:/var/run/docker.sock'

Traefik Plugin

Then modify Traefik's command line, adding these two flags to its Docker command:

--experimental.plugins.sablier.modulename=github.com/sablierapp/sablier
--experimental.plugins.sablier.version=v1.10.1

Traefik Dynamic Configuration - Separate Middleware

Next, add a Traefik dynamic configuration for Sablier:

http:
  middlewares:
    my-sablier:
      plugin:
        sablier:
          group: default
          dynamic:
            displayName: My Title
            refreshFrequency: 5s
            showDetails: "true"
            theme: hacker-terminal
          sablierUrl: http://sablier:10000
          sessionDuration: 1m

Next, hook the services that should start and stop on demand into Sablier. Taking headscale-admin as an example, first convert its Traefik labels into dynamic configuration:

http:
  routers:
    headscale-admin:
      rule: "Host(`headscale.evalexp.top`) && PathPrefix(`/admin`)"
      entryPoints:
        - https
      service: headscale-admin
      tls: {}
  services:
    headscale-admin:
      loadBalancer:
        servers:
          - url: "http://headscale-admin:80"

Then simply add my-sablier@file at the front of the router's middlewares list:

http:
  routers:
    headscale-admin:
      rule: "Host(`headscale.evalexp.top`) && PathPrefix(`/admin`)"
      entryPoints:
        - https
      service: headscale-admin
      middlewares:
        - my-sablier@file
      tls: {}
  services:
    headscale-admin:
      loadBalancer:
        servers:
          - url: "http://headscale-admin:80"

That is not quite all: the container also needs labels so that Sablier can manage it:

headscale-admin:
  restart: always
  container_name: headscale-admin
  image: goodieshq/headscale-admin:latest
  networks:
    - app_net
  labels:
    - "sablier.enable=true"
    - "sablier.group=default"
    #- "traefik.enable=true"
    #- "traefik.http.routers.headscale-admin.rule=Host(`headscale.evalexp.top`) && PathPrefix(`/admin`)"
    #- "traefik.http.routers.headscale-admin.service=headscale-admin"
    #- "traefik.http.services.headscale-admin.loadbalancer.server.port=80"
    #- "traefik.http.routers.headscale-admin.entrypoints=https"
    #- "traefik.http.routers.headscale-admin.tls=true"

The group here must match the group in the dynamic configuration created earlier.

Traefik Dynamic Configuration - Inline Middleware

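Also note that all containers in a group start together and stop together, so services that do not depend on each other are best given groups of their own. I also recommend defining the Sablier middleware directly in each service's dynamic configuration, which makes it easier to change. Taking blog as an example, it can be written as: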

http:
  routers:
    blog:
      rule: "Host(`blog.evalexp.top`) || Host(`evalexp.top`)"
      entryPoints:
        - https
      service: blog
      middlewares:
        - blog-sablier
        - blog-cors-middleware
      tls: {}
  middlewares:
    blog-cors-middleware:
      headers:
        accessControlAllowMethods:
          - GET
          - OPTIONS
          - PUT
        accessControlAllowHeaders: "*"
        accessControlAllowOriginList:
          - https://blog.evalexp.top
          - https://evalexp.top
        accessControlMaxAge: 100
        addVaryHeader: true
    blog-sablier:
      plugin:
        sablier:
          group: blog
          dynamic:
            displayName: Wait for blog start
            refreshFrequency: 5s
            showDetails: "true"
            theme: hacker-terminal
          sablierUrl: http://sablier:10000
          sessionDuration: 1m
  services:
    blog:
      loadBalancer:
        servers:
          - url: "http://blog:80"

Then enable Sablier in the container's Docker labels and assign it to the blog group.